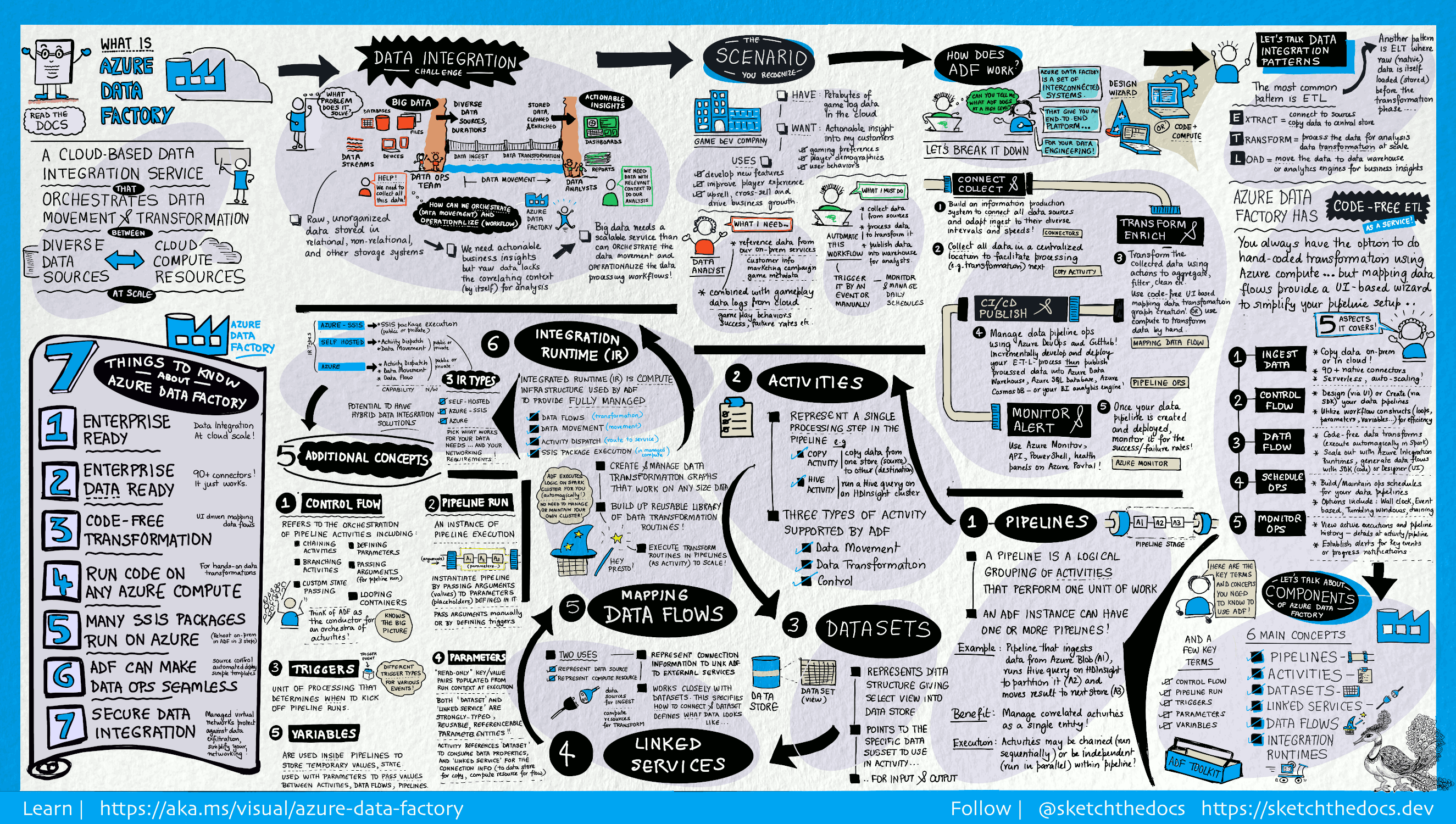

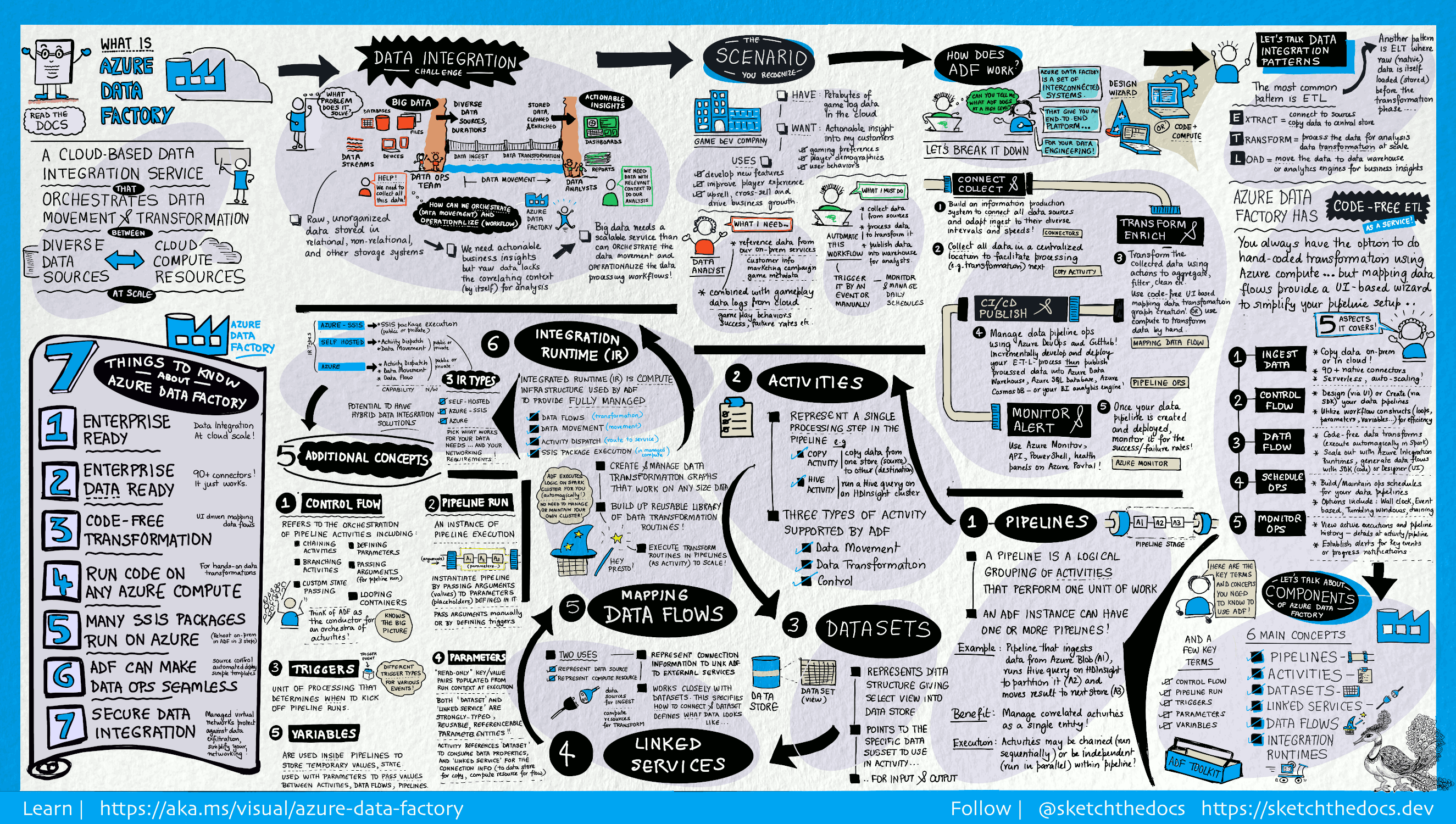

Azure Data Factory (ADF)

- Definition: A cloud-based data integration service THAT orchestrates data movement and transformation BETWEEN various data sources and compute resources

- How does it work?

Connect and collect

Transform and enrich

CI/CD publish

Monitor and alerts

- Most common “data integration pattern” = ETL (Extract/Transform/Load)

- ADF has code-free ETL as a service

- Option to do hard-coded transformation using Azure compute

- Mapping data flows provide a UI wizard to simplify your pipeline setup (5 aspects):

- Ingest data

- Control flow

- Data flow

- Schedule ops

- Monitor ops

- Components:

Pipelines

Activities

Datasets

Linked Services

Dataflows

Integration Runtimes

- Key terms:

Control flow

Pipeline run

Triggers

Parameters

Variables

- PIPELINES: A logical grouping of ACTIVITIES that perform one unit of work

- ADF can have one or more pipelines

- ACTIVITIES: Represent a single “processing step” in the pipeline

- Three (3) types of activities supported by ADF:

- Data movement

- Data transformation

- Control
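The three activity categories can be sketched as a small lookup. This is an illustrative sample only: the `type` strings follow the names used in ADF pipeline JSON as I understand them, the selection is far from complete, and `categorize` and `summarize` are hypothetical helpers, not part of any ADF SDK.

```python
# Illustrative mapping of a few ADF activity "type" values to the three
# categories in the notes. A sample, not a complete list.
ACTIVITY_CATEGORIES = {
    "Copy": "data movement",
    "ExecuteDataFlow": "data transformation",
    "DatabricksNotebook": "data transformation",
    "SqlServerStoredProcedure": "data transformation",
    "ForEach": "control",
    "IfCondition": "control",
    "ExecutePipeline": "control",
    "Wait": "control",
}


def categorize(activity_type: str) -> str:
    """Return the category for an activity type, or 'unknown' if not listed."""
    return ACTIVITY_CATEGORIES.get(activity_type, "unknown")


def summarize(activities: list) -> dict:
    """Count how many activities in a pipeline fall into each category."""
    counts = {}
    for activity in activities:
        category = categorize(activity["type"])
        counts[category] = counts.get(category, 0) + 1
    return counts


# Hypothetical pipeline: loop over files, copy each one, then transform.
example_activities = [
    {"name": "LoopOverFiles", "type": "ForEach"},
    {"name": "CopyFile", "type": "Copy"},
    {"name": "CleanData", "type": "ExecuteDataFlow"},
]
print(summarize(example_activities))
```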

- DATASETS: Represent a data structure that gives a selected view into a data store

- Point to the specific data SUBSET to use in an activity

- For input and output
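How a pipeline groups activities, and how an activity names its input and output datasets, can be sketched as a Python dict that mirrors the general shape of an ADF pipeline JSON definition. All names (`IngestSalesPipeline`, `RawSalesCsv`, `StagingSalesTable`) are hypothetical, the source and sink types are assumptions for illustration, and `datasets_used` is a helper invented here.

```python
import json


def dataset_ref(name: str) -> dict:
    """Build a dataset reference in the shape pipeline JSON uses."""
    return {"referenceName": name, "type": "DatasetReference"}


# One Copy activity: a single processing step with an input and an output.
copy_activity = {
    "name": "CopyRawToStaging",  # hypothetical name
    "type": "Copy",
    "inputs": [dataset_ref("RawSalesCsv")],  # hypothetical dataset
    "outputs": [dataset_ref("StagingSalesTable")],  # hypothetical dataset
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},  # assumed source type
        "sink": {"type": "AzureSqlSink"},  # assumed sink type
    },
}

# The pipeline is the logical grouping of activities.
pipeline = {
    "name": "IngestSalesPipeline",  # hypothetical name
    "properties": {"activities": [copy_activity]},
}


def datasets_used(pipeline_def: dict) -> dict:
    """List the dataset names each pipeline reads from and writes to."""
    used = {"inputs": [], "outputs": []}
    for activity in pipeline_def["properties"]["activities"]:
        for direction in ("inputs", "outputs"):
            for ref in activity.get(direction, []):
                used[direction].append(ref["referenceName"])
    return used


print(json.dumps(pipeline, indent=2))
print(datasets_used(pipeline))
```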

- LINKED SERVICES: Represent the connection information needed to link ADF to external services

- Two (2) uses:

- Represent data source (for INGEST)

- Represent compute resource (for TRANSFORM)

- Works closely with datasets: The linked service specifies HOW to connect & the dataset defines WHAT the data looks like

- Mapping DATAFLOWS: Visually designed (code-free) data transformations, run on Spark clusters that ADF manages
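The linked service / dataset split (HOW to connect versus WHAT the data looks like) can be sketched as two definitions, where the dataset points at the linked service by name. This is a minimal sketch mirroring the general shape of ADF JSON definitions; the names, container, file, and connection string placeholder are all hypothetical, and `resolve_linked_service` is a helper invented for illustration.

```python
# HOW to connect: the linked service holds connection information.
linked_service = {
    "name": "SalesBlobStorage",  # hypothetical name
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            # Placeholder only; real secrets belong in a secret store.
            "connectionString": "<connection-string-placeholder>",
        },
    },
}

# WHAT the data looks like: the dataset describes one subset of that store.
dataset = {
    "name": "RawSalesCsv",  # hypothetical name
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": "SalesBlobStorage",
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "raw",  # hypothetical container
                "fileName": "sales.csv",  # hypothetical file
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}


def resolve_linked_service(dataset_def: dict, linked_services: dict) -> dict:
    """Look up the linked service that a dataset refers to by name."""
    name = dataset_def["properties"]["linkedServiceName"]["referenceName"]
    return linked_services[name]


registry = {linked_service["name"]: linked_service}
resolved = resolve_linked_service(dataset, registry)
print(resolved["properties"]["type"])
```

Keeping the connection details in one place means several datasets can reuse the same linked service, each describing a different subset of the store.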

- INTEGRATION RUNTIMES (IR):

- Compute infrastructure used by ADF to provide FULLY MANAGED:

- Dataflows

- Data movements

- Activity dispatch

- SSIS Package Execution

- Automatically manages Spark clusters

- Three (3) IR types:

- Azure-SSIS: SSIS (SQL Server Integration Services) package execution (public or private)

- Self-hosted: Activity dispatch & Data movements (public or private)

- Azure: Activity dispatch & Data movements & Dataflows (public or private)

- Hybrid solution, depending on your networking needs
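The IR types and their capabilities, as listed in these notes, can be captured in a small lookup to check which runtime fits a workload. This is a sketch of the notes only, not an ADF API; `IR_CAPABILITIES` and `runtimes_supporting` are hypothetical names.

```python
# Capabilities per integration runtime type, as listed in the notes above.
IR_CAPABILITIES = {
    "Azure": {"activity dispatch", "data movement", "data flows"},
    "Self-hosted": {"activity dispatch", "data movement"},
    "Azure-SSIS": {"SSIS package execution"},
}


def runtimes_supporting(capability: str) -> list:
    """Return the IR types (sorted by name) that offer a given capability."""
    return sorted(
        name for name, caps in IR_CAPABILITIES.items() if capability in caps
    )


print(runtimes_supporting("data flows"))
print(runtimes_supporting("data movement"))
```

Network placement (public or private) is a separate decision from capability, which is why a hybrid setup may combine more than one IR type.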
